Back in graduate school, I came across a short PLoS Computational Biology paper by William Stafford Noble called A Quick Guide to Organizing Computational Biology Projects (2009). It was only a few pages long, but it changed how I think about organizing any kind of technical project, even now. Noble opens the paper with two deceptively simple principles:

- Someone unfamiliar with your project should be able to look at your files and understand what you did and why.

- Everything you do, you will probably have to do again.

He proposed a clean, straightforward structure. Many software projects already used something similar, but applying it to research work was new.

    projectroot/
        data/
        results/
        src/
        bin/
        doc/

Each directory had a clear purpose. Raw data stayed untouched in data/, processed results went into results/, scripts lived in src/, compiled binaries went into bin/, and notes or manuscripts belonged in doc/. Simple, but effective. He even suggested naming results chronologically (191025_experiment1) so the story of your analysis unfolded naturally over time.

It felt tedious to set up at first, but within a few weeks the structure made sense, and it paid off later. Even now, I can open a project from ten years ago and understand what I did, what the files mean, and how everything fits together. Writing papers or reproducing experiments stopped being painful. It wasn’t about being tidy; it was about being clear, transparent, and kind to my future self.
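The chronological naming idea can be sketched in a few lines of Python. This is only an illustration, not code from Noble's paper: the helper name `dated_results_dir` is hypothetical, and it assumes the example name 191025_experiment1 reads as a two-digit year, month, and day (YYMMDD), which keeps directory listings in date order.

```python
from datetime import date
from pathlib import Path
from typing import Optional


def dated_results_dir(label: str, root: Path = Path("results"),
                      day: Optional[date] = None) -> Path:
    """Create <root>/<YYMMDD>_<label>/ if needed and return its path."""
    day = day or date.today()
    # %y%m%d is an assumed reading of the 191025 prefix:
    # two-digit year, then month, then day.
    path = root / f"{day.strftime('%y%m%d')}_{label}"
    # parents=True builds missing parent folders; exist_ok=True allows reruns.
    path.mkdir(parents=True, exist_ok=True)
    return path
```

Calling `dated_results_dir("experiment1")` creates a folder for today's date under results/ and returns its path, so each new round of analysis gets its own dated home.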

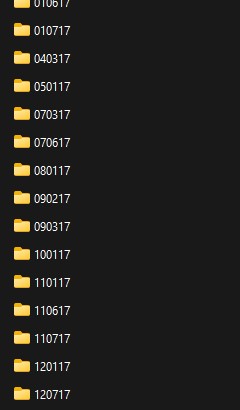

Here is an example of my experiments from my grad school days (this is one structure):

Years later, when I moved into data science, I realized Noble’s ideas weren’t just for bioinformatics. They apply everywhere. Most of my projects, even personal ones, still start with the same basic structure whether I’m working with business data, product metrics, or machine learning pipelines. I’ve expanded it over time. Now there’s version control through Git, and each folder has a markdown file or a plain text file where I keep notes and context.

    date_project_name/
        config/                    # configuration files
        .venv/                     # virtual environment
        data/raw/
        data/processed/version/
        results/date/explanation/tables/
        results/date/explanation/plots/
        results/date/explanation/aggregates/
        results/date/markdown/writeups/
        src/deprecated/
        # Notebooks that store results. When you replace something inside one,
        # create a new notebook or save a copy that keeps the old results.
        src/experiment_version_date_reason.ipynb
        src/scripts/
        README.md
        pyproject.toml             # Poetry dependencies
        logs.txt                   # captured print statements and states
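The logs.txt entry in the layout above is meant to capture print statements and state. One possible way to do that, sketched here under assumptions, is to use Python's standard `logging` module instead of bare `print` calls. The function name `get_logger`, the logger name "project", and the message format are illustrative choices, not part of the original layout.

```python
import logging


def get_logger(log_file: str = "logs.txt") -> logging.Logger:
    """Return a logger that writes to both the console and log_file."""
    logger = logging.getLogger("project")  # hypothetical logger name
    logger.setLevel(logging.INFO)
    # Attach handlers only once so repeated calls do not duplicate lines.
    if not logger.handlers:
        fmt = logging.Formatter("%(asctime)s %(levelname)s %(message)s")
        # FileHandler opens the file in append mode by default,
        # so earlier runs stay in the log.
        for handler in (logging.StreamHandler(), logging.FileHandler(log_file)):
            handler.setFormatter(fmt)
            logger.addHandler(handler)
    return logger
```

With this in place, a call such as `get_logger().info("loaded %d rows", n_rows)` (where `n_rows` is whatever count your script has at hand) shows the message on screen and appends a timestamped line to logs.txt.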

Data science projects follow the same logic. We think in terms of experiments, data, and iteration, so the structure should reflect that. Raw data is shared across roles: analysts, engineers, and stakeholders all touch the same files. A consistent structure, predictable directories, reproducible scripts, and clear documentation save time, reduce confusion, and make collaboration easier.

What still sticks with me most is the mindset behind it. It’s not about rigid rules or perfectionism. It’s about assuming that your future self, or someone else, will one day have to make sense of your work without you there to explain it. That’s the real definition of reproducibility. Even now, when I open a new repo or an empty folder, I still think of that small paper because it taught me one of the biggest lessons of my career.

Here is an example script that builds the structure and fills in any gaps. It is safe to run multiple times: if a directory already exists, Python won’t stop the script. It’ll move on and create any missing subfolders.

    import os
    from datetime import date

    # Label for this round of results; change it per experiment
    explanation = "initial_experiment"
    today = date.today().strftime("%Y_%m_%d")
    project_name = f"{today}_example_project"

    # Subfolders to create inside the project root
    folders = [
        "config",
        "data/raw",
        "data/processed/version",
        f"results/{today}/{explanation}/tables",
        f"results/{today}/{explanation}/plots",
        f"results/{today}/{explanation}/aggregates",
        f"results/{today}/{explanation}/markdown/writeups",
        "src/deprecated",
        "src/scripts",
    ]

    # exist_ok=True makes reruns safe: existing directories are left alone
    os.makedirs(project_name, exist_ok=True)
    for f in folders:
        path = os.path.join(project_name, f)
        os.makedirs(path, exist_ok=True)

    # Append mode creates a missing file without truncating an existing one
    open(os.path.join(project_name, "README.md"), "a").close()
    open(os.path.join(project_name, "logs.txt"), "a").close()

    print(f"Project structure created under: {project_name}")
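The script above creates the folders but not the per-folder notes files mentioned earlier. A minimal sketch of that step follows, assuming a hypothetical file name `NOTES.md` and a hypothetical helper `add_notes_files`; the rule of skipping hidden folders such as .venv is also an assumption, and you can adjust both to your own conventions.

```python
from pathlib import Path
from typing import List


def add_notes_files(project_root: str, filename: str = "NOTES.md") -> List[Path]:
    """Create a notes file in every non-hidden subfolder that lacks one."""
    root = Path(project_root)
    created = []
    # sorted() collects the full listing before any file is written.
    for folder in sorted(root.rglob("*")):
        if not folder.is_dir():
            continue
        # Skip hidden folders such as .venv or .git, and anything inside them.
        if any(part.startswith(".") for part in folder.relative_to(root).parts):
            continue
        notes = folder / filename
        if not notes.exists():  # never overwrite notes you already wrote
            notes.write_text(f"# Notes for {folder.name}\n")
            created.append(notes)
    return created
```

Like the folder script, this is safe to rerun: existing notes files are left untouched, and only the newly created paths are returned.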

Reference:

Noble, W. S. (2009). A Quick Guide to Organizing Computational Biology Projects. PLoS Computational Biology, 5(7): e1000424.
